DDoS targets English ISP

Title

An ISP hit by a two-day DDoS campaign used FLOWCUTTER to pinpoint and neutralize the attack vectors.

Situation

An ISP in the UK was targeted by a series of DDoS attacks: 20 attacks within two days.

Each attack overloaded the edge routers. During the attacks, each of which lasted roughly an hour or more, all of the provider’s customers experienced internet outages.

On the morning of the third day, a technician reached out and installed FLOWCUTTER to see whether it could help.

Challenge

Most attacks are short and can simply be waited out. This series of attacks made it impossible for the ISP to operate, and the hotline rang with angry customers for the whole two days.

After painfully trying to find relevant information in SNMP monitoring, the administrator decided to be prepared for the next attack and to understand it:

- What method was used for the attack

- Who the attacker is

- What exactly the target is

Solution

This is exactly where NetFlow data comes in handy. FLOWCUTTER’s NetFlow analysis is well suited to such a situation.

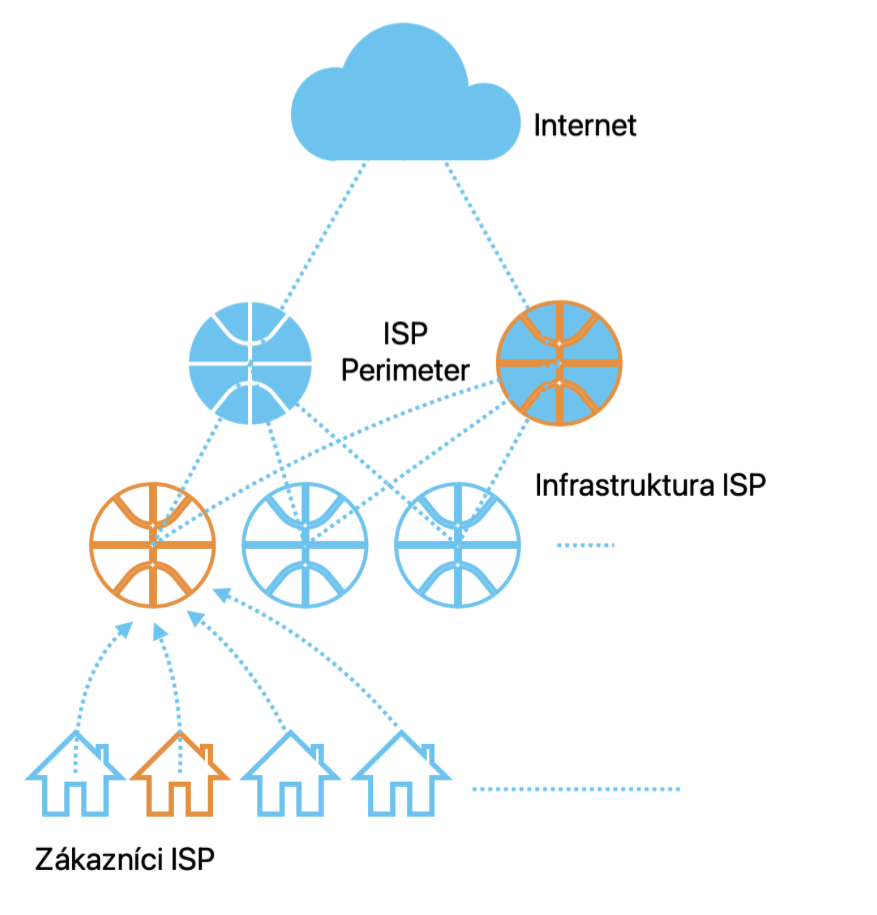

The ISP set up NetFlow export from its edge routers to FLOWCUTTER and was then able to analyze the attack and mitigate it.

Results

Below are the findings from this particular instance of a distributed DoS attack.

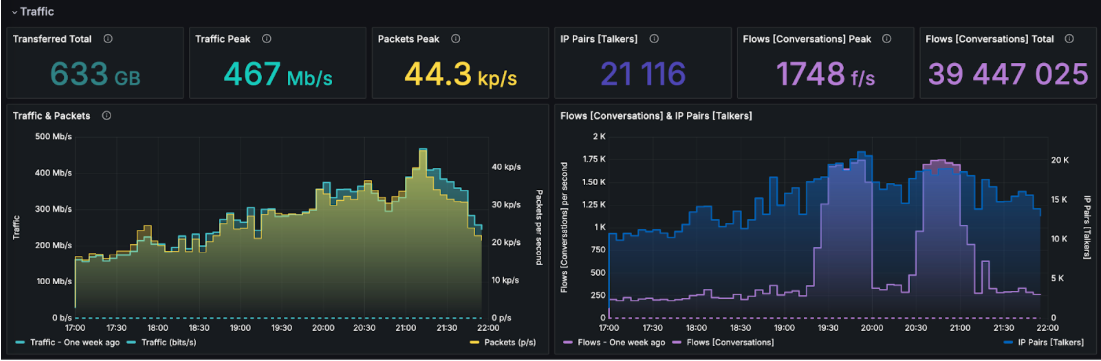

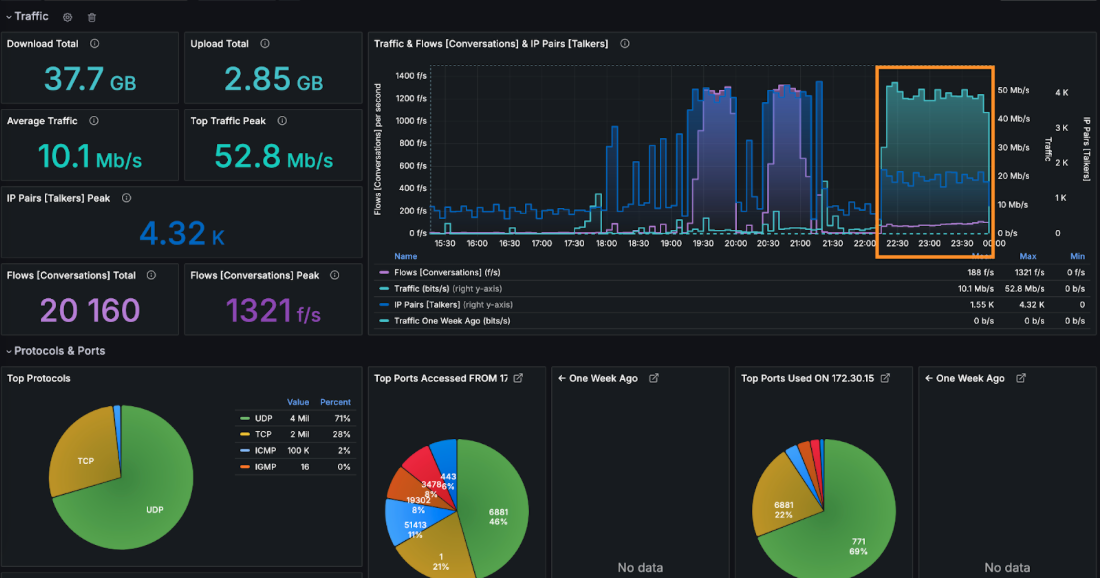

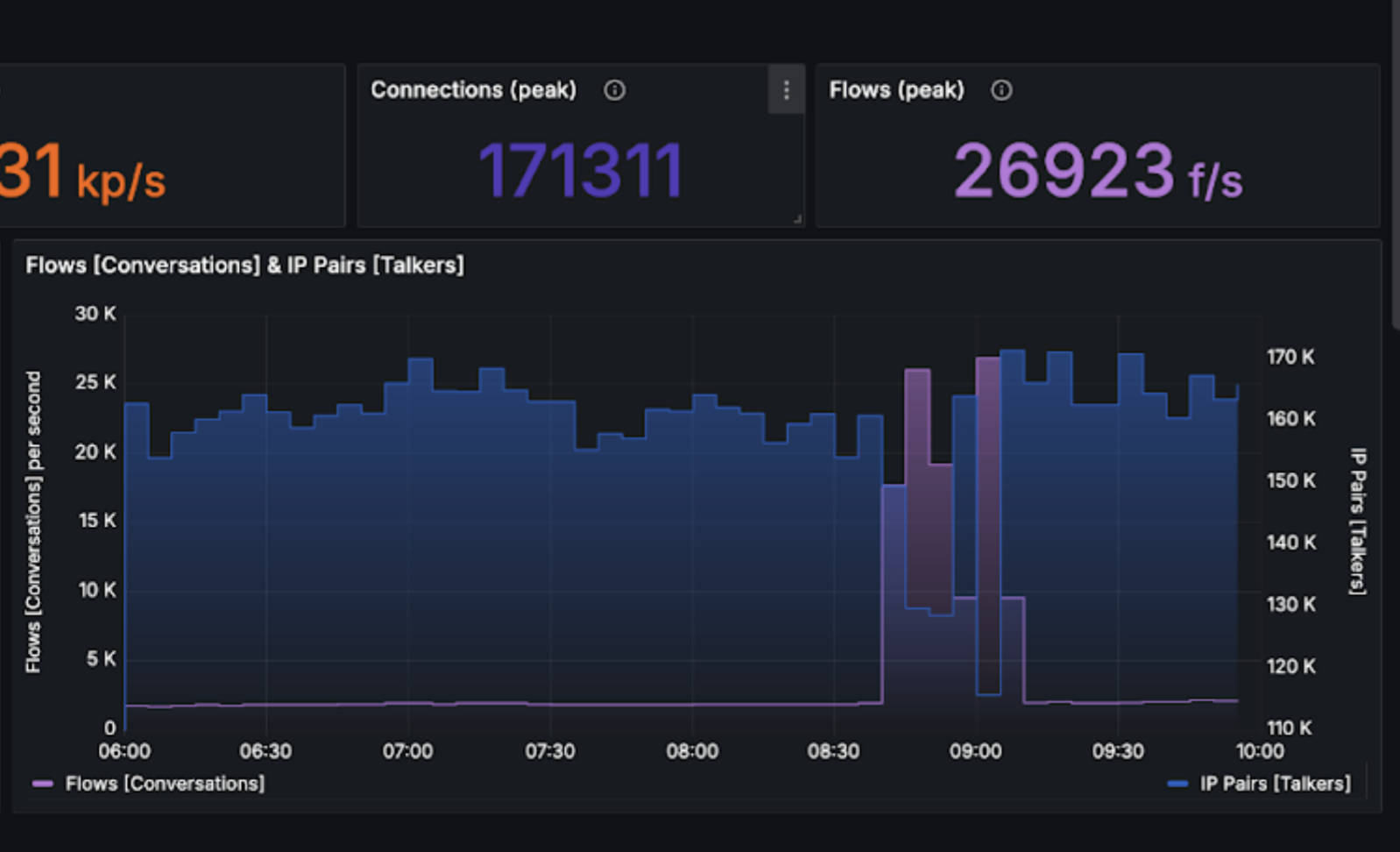

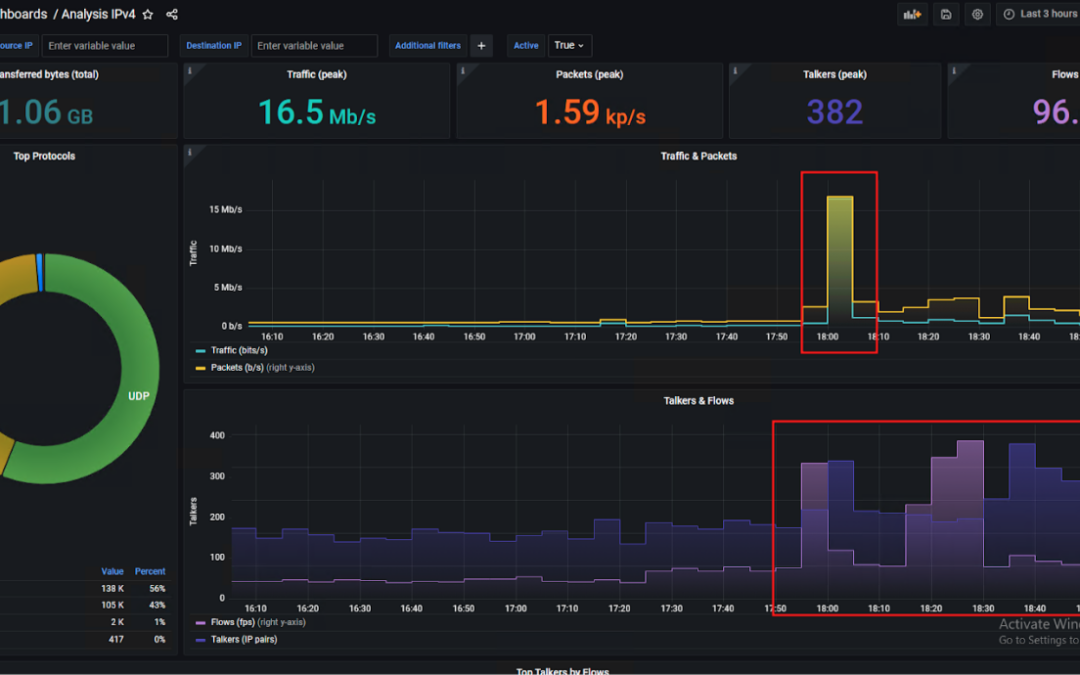

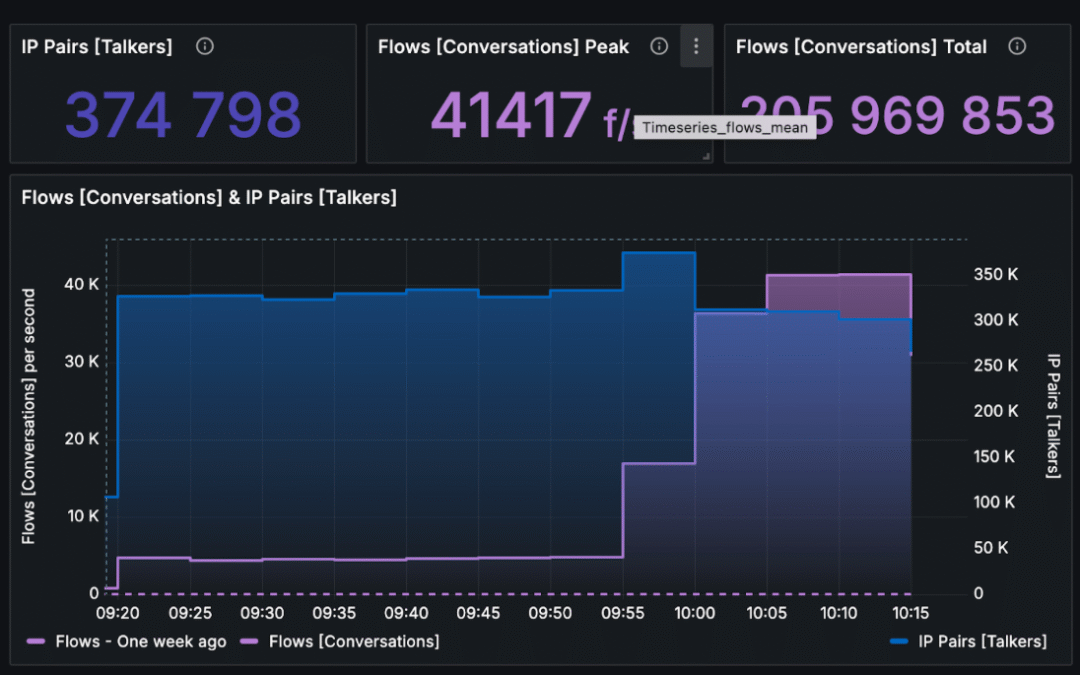

The operator was able to observe an anomaly in incoming connections (flows per second). The rate rose 25-fold compared with normal behavior before the attack.
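The kind of check behind this observation can be sketched in a few lines of Python. This is only an illustration, not how FLOWCUTTER detects anomalies: the function name `find_anomalies`, the window size, the threshold factor, and the sample values are all assumptions made for the example.

```python
from statistics import median


def find_anomalies(flows_per_second, baseline_window=5, factor=10.0):
    """Return indices of samples that are at least `factor` times the baseline.

    The baseline is the median of the last `baseline_window` samples that
    were NOT flagged, so an ongoing attack does not inflate it.
    """
    anomalies = []
    baseline_samples = []
    for i, fps in enumerate(flows_per_second):
        if len(baseline_samples) >= baseline_window:
            baseline = median(baseline_samples[-baseline_window:])
            if baseline > 0 and fps >= factor * baseline:
                anomalies.append(i)
                continue  # keep attack samples out of the baseline
        baseline_samples.append(fps)
    return anomalies


# Hypothetical flows-per-second samples: normal traffic, then a ~25x spike.
samples = [1000, 1100, 950, 1050, 1000, 1020, 25000, 26000, 1010]
print(find_anomalies(samples))  # prints [6, 7]
```

Using a median of recent normal samples rather than a fixed threshold keeps the check usable on links with very different traffic levels.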

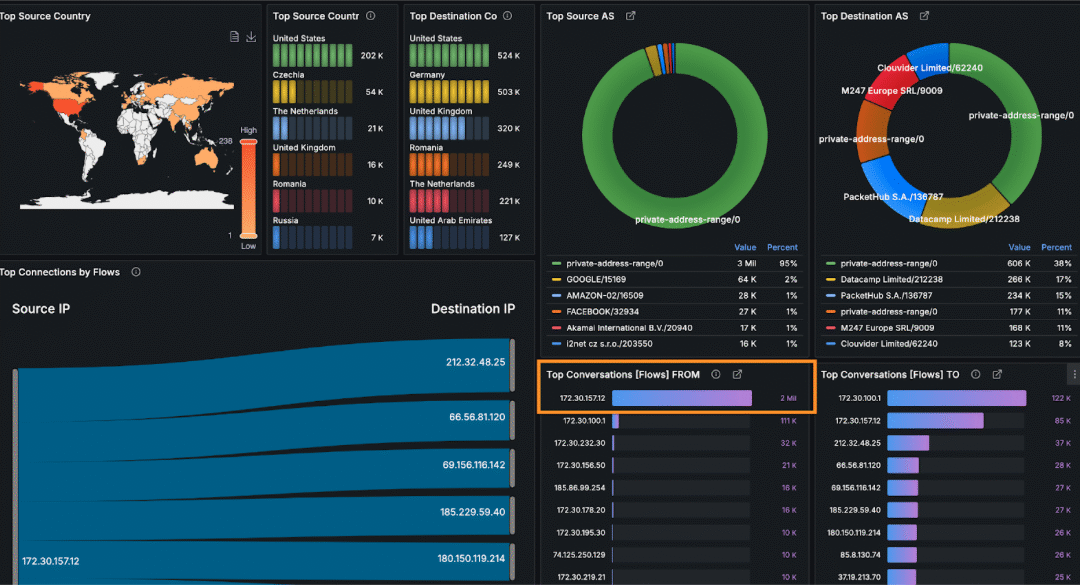

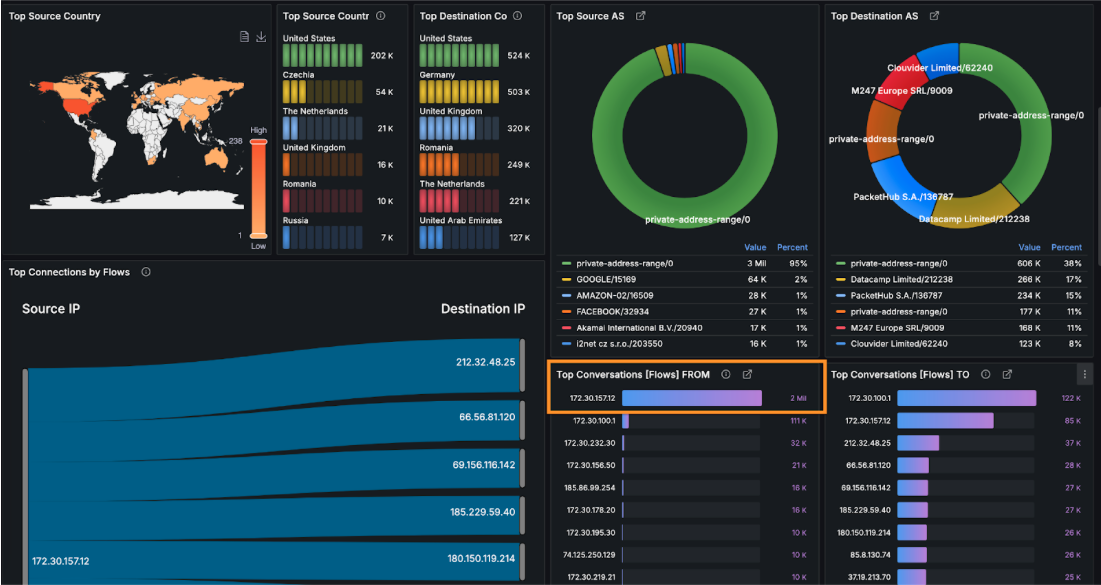

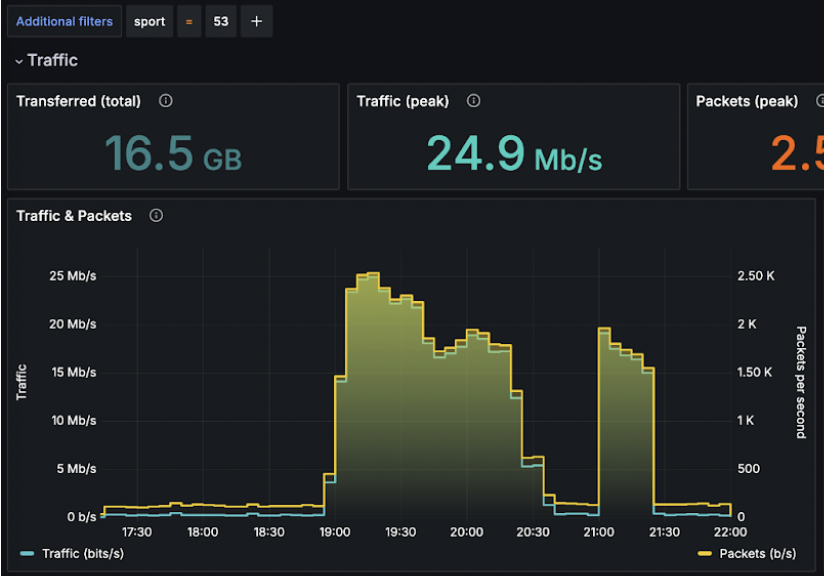

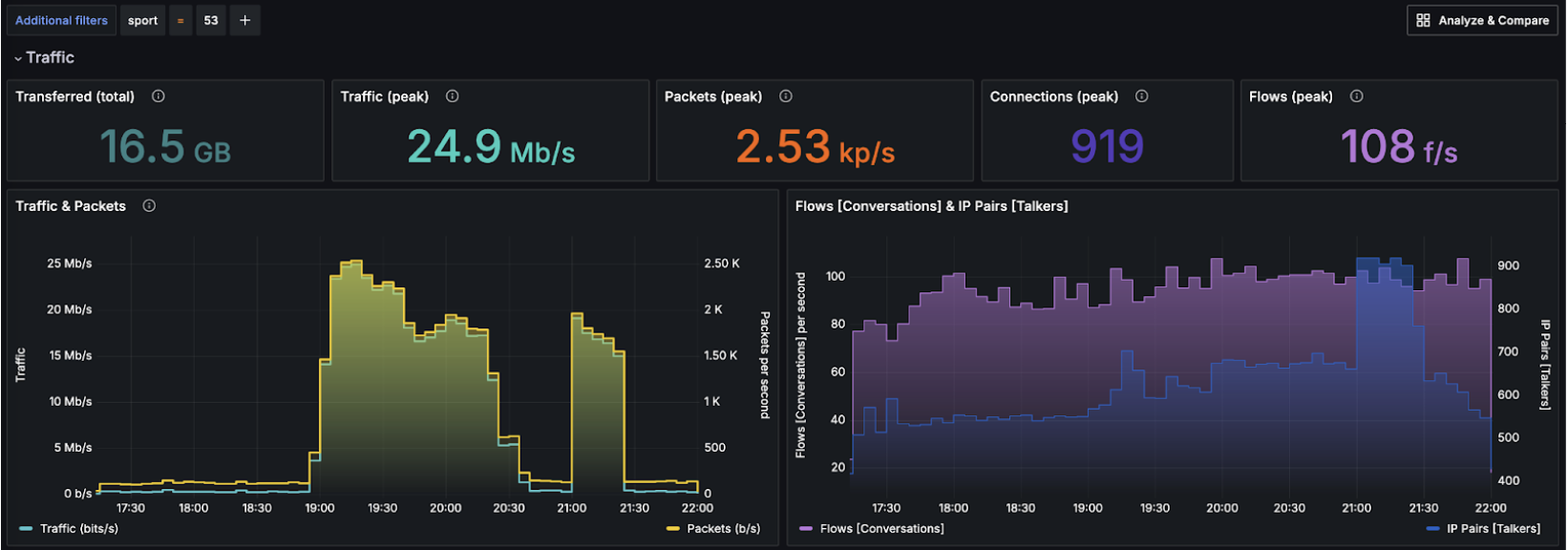

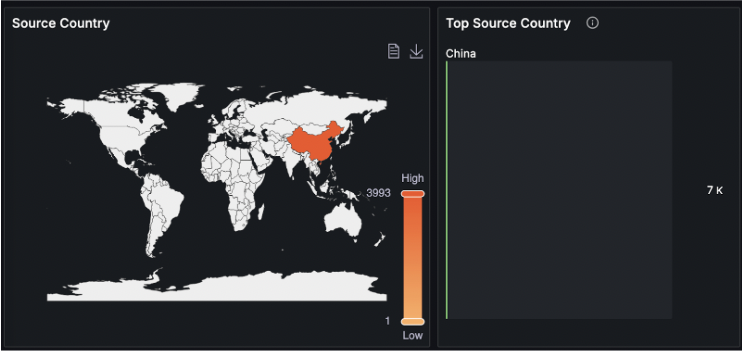

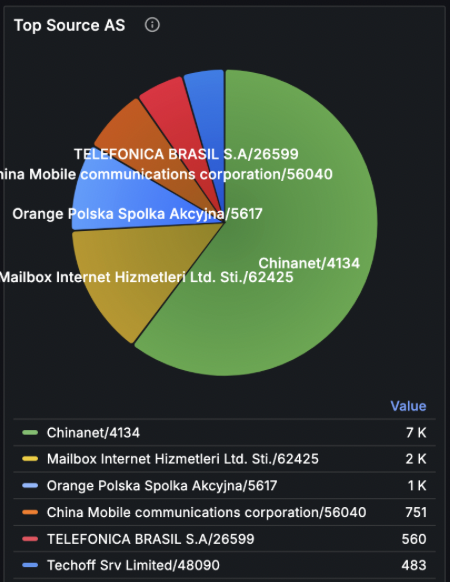

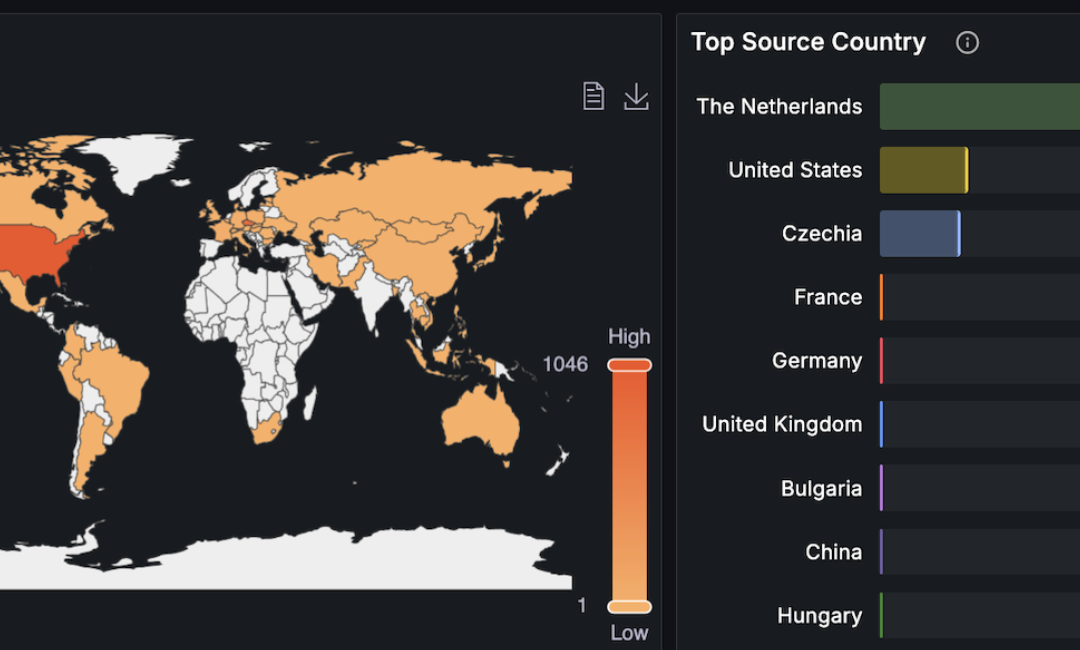

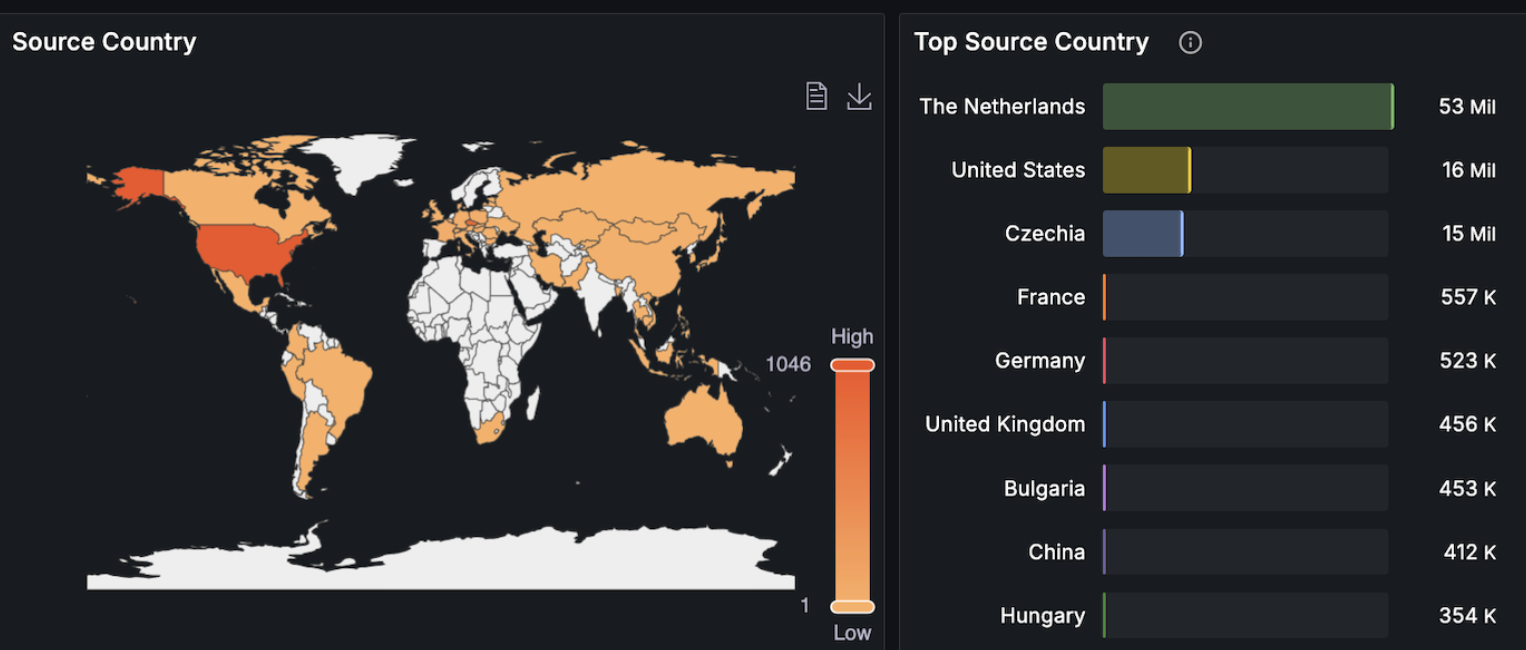

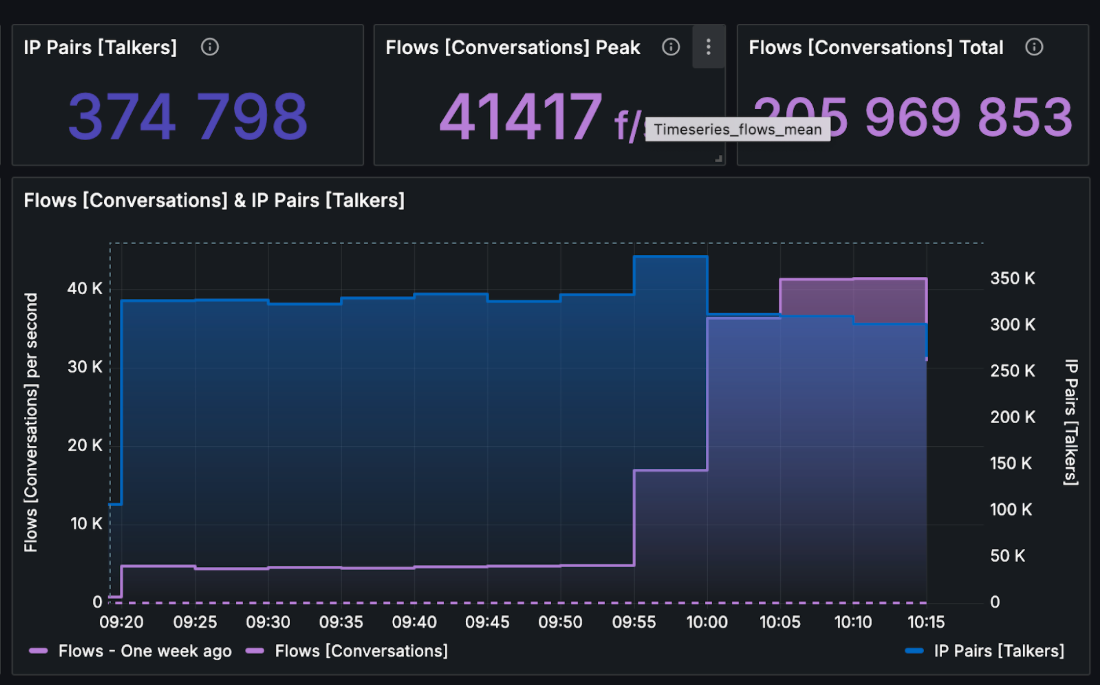

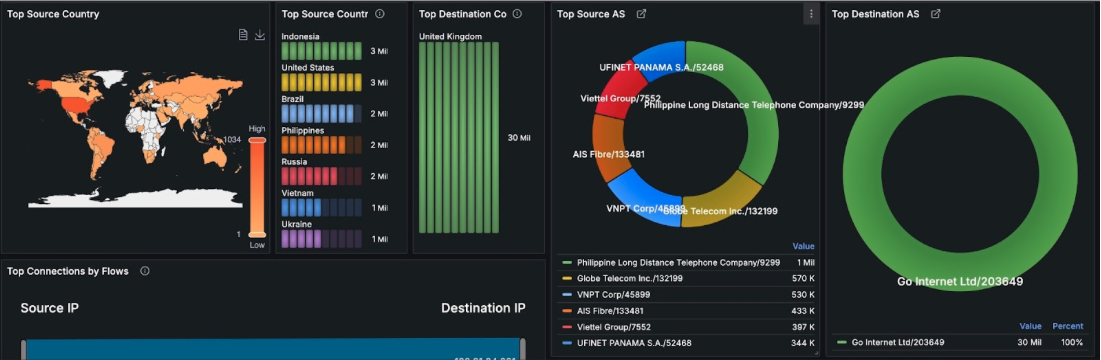

When the operator zoomed in on the attack, he was able to see the vector clearly. Unfortunately, the situation was not an easy one: the attack was fully distributed. It did not come from a few IPs or ASNs, but from tens of thousands of hosts all around the world.
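One way to quantify "fully distributed" from flow records is to count unique sources and check how much of the traffic the largest senders account for. The sketch below assumes flow records reduced to `(src_ip, packets)` pairs; the function name `source_dispersion` and the sample data are hypothetical.

```python
from collections import Counter


def source_dispersion(flows, top_n=10):
    """Summarize how spread out the traffic sources are.

    flows: iterable of (src_ip, packets) pairs.
    Returns (number of unique sources, share of packets sent by the
    top_n busiest sources).
    """
    packets_by_source = Counter()
    for src_ip, packets in flows:
        packets_by_source[src_ip] += packets
    total = sum(packets_by_source.values())
    top = sum(count for _, count in packets_by_source.most_common(top_n))
    share = top / total if total else 0.0
    return len(packets_by_source), share


# Made-up example: 1000 sources sending the same amount of traffic each.
flows = [(f"host-{i}", 50) for i in range(1000)]
unique, top_share = source_dispersion(flows)
print(unique, round(top_share, 3))
```

A large number of unique sources combined with a small top-N share means that blocking individual source IPs or ASNs will not help, which matches the situation described above.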

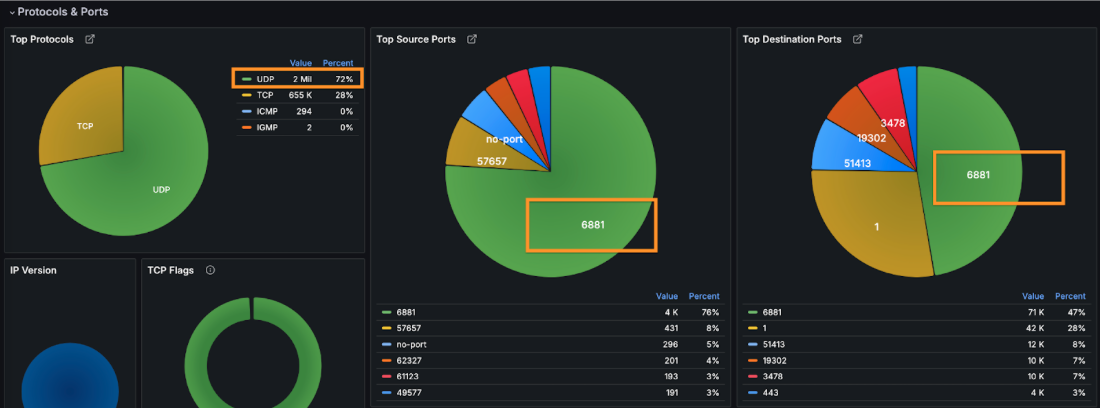

Moreover, the incoming packets used UDP and neither originated from nor targeted any specific ports.

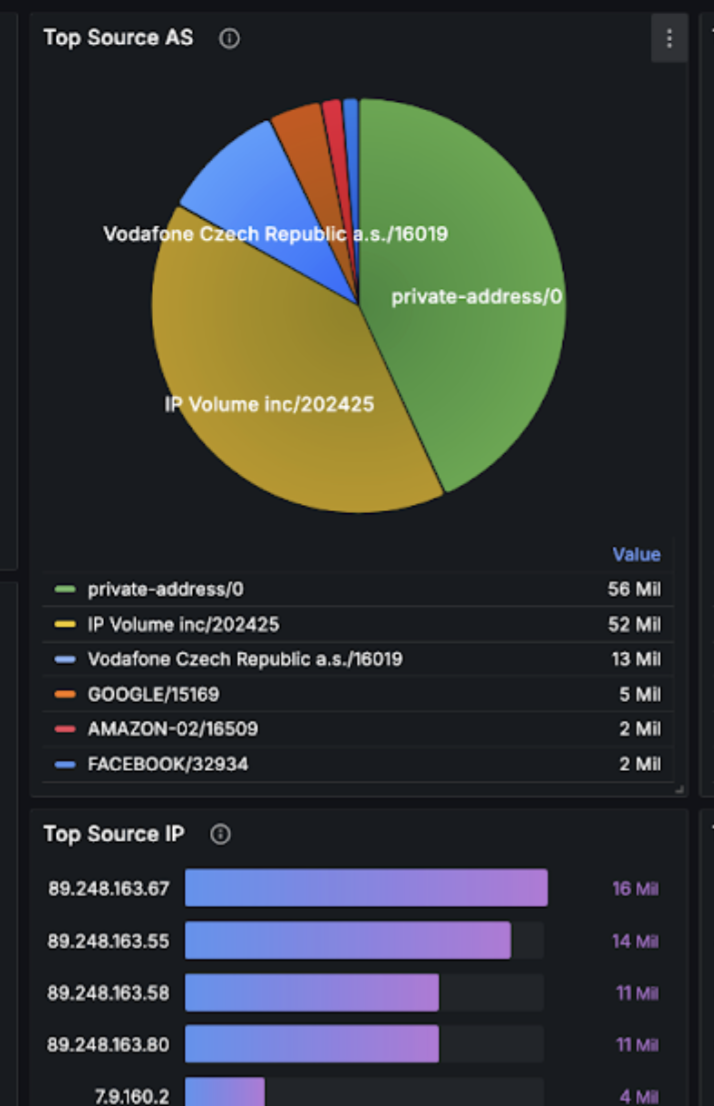

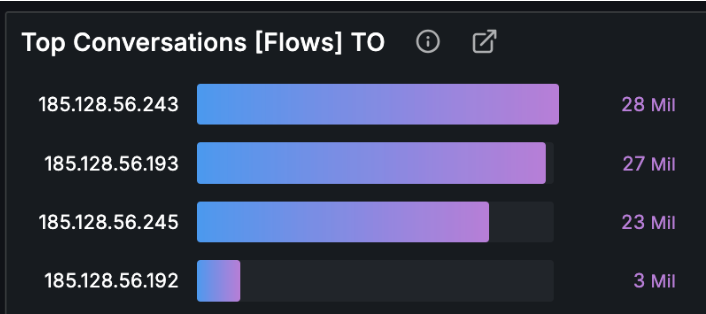

However, this attack targeted only a few IP addresses.
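Grouping the UDP flows by destination address is one way to surface such a small set of targets. The following is a minimal sketch under assumed inputs: flow records as dictionaries with `proto`, `dst_ip`, and `bytes` keys, a hypothetical `top_targets` helper, and made-up addresses from documentation ranges rather than the ISP's real data.

```python
from collections import Counter


def top_targets(flows, protocol="UDP", min_share=0.05):
    """Return (dst_ip, share_of_bytes) for destinations receiving at least
    `min_share` of the bytes carried by the given protocol."""
    bytes_by_dst = Counter()
    for flow in flows:
        if flow["proto"] == protocol:
            bytes_by_dst[flow["dst_ip"]] += flow["bytes"]
    total = sum(bytes_by_dst.values())
    if total == 0:
        return []
    return [
        (ip, count / total)
        for ip, count in bytes_by_dst.most_common()
        if count / total >= min_share
    ]


# Made-up flows: two heavily hit addresses plus background traffic.
flows = [
    {"proto": "UDP", "dst_ip": "203.0.113.10", "bytes": 600},
    {"proto": "UDP", "dst_ip": "203.0.113.20", "bytes": 380},
    {"proto": "UDP", "dst_ip": "203.0.113.30", "bytes": 20},
    {"proto": "TCP", "dst_ip": "203.0.113.40", "bytes": 500},
]
for ip, share in top_targets(flows):
    print(ip, f"{share:.0%}")
```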

The whole bandwidth was saturated, so the operator could not solve the situation simply by dropping packets to the four IPs above on the firewall or edge router: by the time the traffic reached them, it had already filled the uplinks.

Instead, the ISP mitigated the attacks by using the BGP communities of its upstream providers, so that traffic to the target IPs was not even routed to the ISP’s network.
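The case study does not say which communities were used. A common form of this technique is remotely triggered blackholing: each target address is announced as a host route tagged with a community that tells the upstream to discard the traffic. The sketch below only builds the list of such announcements; the `blackhole_routes` helper is hypothetical, the addresses are made up, and `65535:666` is the well-known BLACKHOLE community from RFC 7999, used here as a placeholder because many providers define their own values.

```python
import ipaddress

# RFC 7999 well-known BLACKHOLE community. Assumption: the upstream
# provider honors it; many providers document their own community instead.
BLACKHOLE_COMMUNITY = "65535:666"


def blackhole_routes(target_ips, community=BLACKHOLE_COMMUNITY):
    """Build host-route announcements (/32 for IPv4, /128 for IPv6)
    tagged with the blackhole community for each attacked address."""
    routes = []
    for ip in target_ips:
        addr = ipaddress.ip_address(ip)  # raises ValueError on bad input
        prefix_len = 32 if addr.version == 4 else 128
        routes.append({"prefix": f"{addr}/{prefix_len}", "community": community})
    return routes


# Made-up target addresses from documentation ranges.
for route in blackhole_routes(["203.0.113.10", "2001:db8::1"]):
    print(route["prefix"], route["community"])
```

The trade-off is that blackholed addresses become unreachable for legitimate traffic as well, but the rest of the network stays online because the attack traffic is dropped before it reaches the ISP's links.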

Resources

- NetFlow analysis in Grafana

- Flow-based (D)DoS detection

- Mitigation using BGP

Takeaway

An ISP under distributed attack for two days used NetFlow data to identify the attack vectors. By analyzing the data in FLOWCUTTER, it obtained the information needed to neutralize the damage and end the outages for its customers.